While upgrading my ESX 4.1 server farm, I noticed that the ESXi Management Network interface behaves differently from the ESX 4.1 Service Console. So I decided to test further, to understand the concept and deployment of multiple Management Network interfaces on ESXi 5.0.

Test Objectives

The objective is to test the redundancy of the ESXi Management Network interfaces for vCenter connectivity and SSH, so that ESXi can be recovered remotely should part of the network fail.

Test Environment

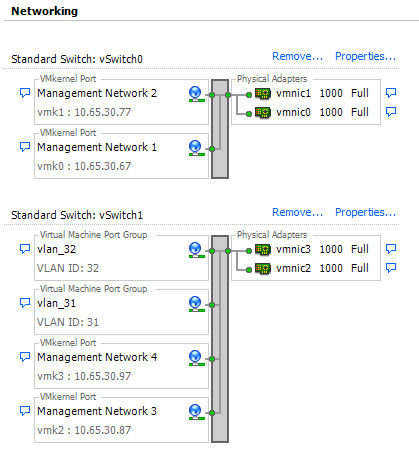

- Set up 2 vSwitches, each with 2 Management Network interfaces.

- All Management Network IPs are in the same IP subnet.

- vCenter is in the same subnet as the Management Network IPs.

- 1 test machine, located in the same Management Network subnet, performs the ping test.

- Everything is set up on the same IP subnet so that other network issues, such as routing and the single VMkernel default gateway, are taken out of this test.
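The test machine's job in this setup is simply to keep pinging every Management Network IP and note which ones stop answering. A minimal Python sketch of such a monitor follows. The interface names and 192.0.2.x addresses are hypothetical placeholders, and `ping_once` assumes a Linux test machine with iputils `ping` (`-c` count, `-W` timeout in seconds); other platforms use different flags.

```python
import subprocess
import time
from typing import Callable, Dict, List, Optional

# Hypothetical names and addresses: substitute your own Management Network IPs.
TARGETS: Dict[str, str] = {
    "Management Network 1 (vSwitch0)": "192.0.2.11",
    "Management Network 2 (vSwitch0)": "192.0.2.12",
    "Management Network 3 (vSwitch1)": "192.0.2.13",
    "Management Network 4 (vSwitch1)": "192.0.2.14",
}


def ping_once(ip: str) -> bool:
    """Send one ICMP echo request (assumes Linux iputils ping: -c count, -W timeout in s)."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", "1", ip],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def sweep(targets: Dict[str, str],
          probe: Callable[[str], bool] = ping_once) -> Dict[str, bool]:
    """Probe every Management Network IP once and record whether it answered."""
    return {name: probe(ip) for name, ip in targets.items()}


def unreachable(results: Dict[str, bool]) -> List[str]:
    """Names of interfaces that did not answer, in the order they were probed."""
    return [name for name, ok in results.items() if not ok]


def monitor(targets: Dict[str, str],
            probe: Callable[[str], bool] = ping_once,
            interval: float = 2.0,
            rounds: Optional[int] = None) -> None:
    """Repeat the sweep and print a timestamped status line; rounds=None runs until Ctrl-C."""
    done = 0
    while rounds is None or done < rounds:
        down = unreachable(sweep(targets, probe))
        stamp = time.strftime("%H:%M:%S")
        print(stamp, "all reachable" if not down else "DOWN: " + ", ".join(down))
        done += 1
        time.sleep(interval)
```

Run `monitor(TARGETS)` on the test machine while pulling the vmnic0 and vmnic1 uplinks. Passing `probe` as a parameter keeps the sweep logic testable without a live network.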

Ping Test Captured from the Test Machine

Test Machine Ping Test Result

Conclusion of Test Case 1

When the vmnic0 and vmnic1 uplinks failed, all the Management Network interfaces failed.

Connecting to the Management Network 3 and 4 IPs from vCenter also failed, and so did SSH to Management Network 3 and 4.

This came as a surprise to me. I expected the Management Network interfaces connected to vSwitch0 to fail, but the IP addresses connected to vSwitch1 to stay reachable. When they did not, I nearly fell off my chair.

But after realising that the Network Adapters setting for the Management Network in my DCUI still pointed to vmnic0 and vmnic1, I had second thoughts. Because the DCUI Network Adapters setting still pointed to vmnic0 and vmnic1, I cannot fault ESXi for not switching the Management Network IP over to vmnic3 and vmnic4.

In this case, it seems to me that when the uplinks specified in the DCUI Network Adapters setting fail, all vmk interfaces fail. This raises a serious concern, because vMotion, FT and iSCSI would also fail even if they are on a different vSwitch and different NICs. It also raises a design question: how should we protect the Management Network IP?

This highlights a weakness in the ESXi Management IP design compared to the ESX 4.0 Service Console.

Recovery of Test Case 1

1) Identify the network problem and restore the network link.

2) I also tried working in the DCUI to bind vmnic2 to the Management Network adapter group.

I tried rebinding using vmnic4, but that produced even more errors and can cause more problems. After a few tries and re-setups of the lab (I had no choice but to reset the network configuration in the DCUI back to default), I realised that after binding vmnic2 to the Management Network adapters, you just have to accept the errors and reboot the ESXi system.

After the reboot, I managed to regain control of the ESXi host in my vCenter.

Conclusion of Recovery Method of Test Case 1

This recovery test exposed another problem with recovering the ESXi Management Network IP. Because you can't shell into ESXi 5.0 anymore, you cannot run commands such as vCLI to check the current status of the network card binding.

After binding vmnic2, vmk0 and vmk1 are still not up; you have to reboot for the new vmnic2 binding to take effect. If VMs are running at that moment and the system must be rebooted, there needs to be a way to shut the VMs down. But because there is no shell in the DCUI, you can't shut down any VMs. This poses an even bigger problem!

So if you are hit by this situation, the best and safest way is still to restore the physical network link.
